A Hugging Face model a day

Let me begin by saying my interaction with hugging face has been almost null since trying out PCA models for a Deep Learning Course.

The motivation behind this post comes after knowing HF has ways to automate most of the things I do day by day, and small nice-to-have activities that would make my life marginally better.

Why waste time building out stuff when people have spent months or years mantaining models in (near) good health?

The idea is to have a one stop place where I can record:

What each model is for. Was it useful in solving what I needed. How easy/difficult was it to set up – I have no groundbreaking code skills: if I can set it up, so can you.

Today 2 models: transcribing audio and image generation using training on specific features.

Whisper.ccp: Transcribing

The model that sparked this idea. Whisper supports locally transcribing, no upload limit, and is fully free to use.

My main need here was transcribing a 1.45 hour interview with two people and no background noise. The tricky thing is the interview was done in Argentinian Spanish and the main speaker used a lot of gestures, meaning transcribing the audio also requires some flexibility around understanding that phrases accompannying gestures are not necessarily easy to transcribe.

I manually downloaded pre-converted models from here.

#Preconverted model used

curl -L -o models/ggml-base.bin https://ggml.ggerganov.com/ggml-model-whisper-base.bin

set up

brew install cmake

cmake --version

#

cmake -B build

if done: [100%] Built target bench

One small set up needed is Whisper does not support .m4a files. It only supports .wav, .mp3, .flac, and raw PCM formats. You will need to convert it into .wav first.

# is your file in wav? if so convert

you might need: brew install ffmpeg

ffmpeg -i ~/Desktop/name.m4a ~/Desktop/name.wav

model include in -f the name of your audio ie. ~/Desktop/name

output saves your transcript outside of the terminal

./build/bin/whisper-cli -m models/ggml-base.bin -l es -f your-spanish-audio.wav --output-txtIn case you need to transcribe and translate it back into another language you can also do so by adding --translate before -l. Next up you will see a stream of transcription in your terminal which will then save as a txt file.

If you can’t be bothered to look into each transcription, you can just throw this into Chat GPT 4.0 explain basic ideas that were talked about and gather your main takeaways.

Difficulty: Low. Restrictions: I’ve been told that if ran it from an Intel chip, it requires more set up and can be less accessible. The experience above is run on Apple Silicon M3. I expect the experience is even better when transcribing in Spain’s Spanish or English. Would use again and recommend: 10/10 experience.

CryptoGAN: making punk pfps

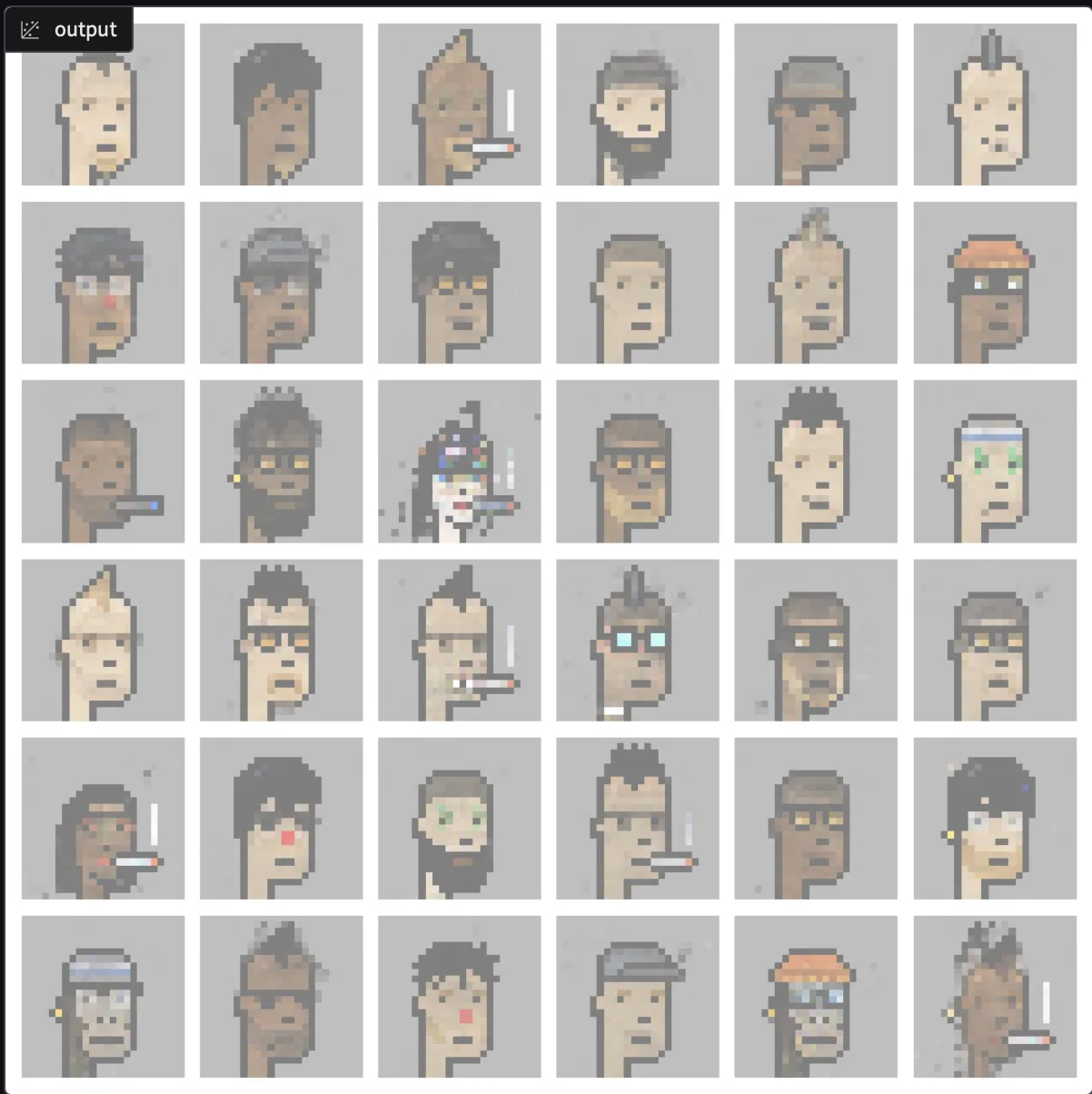

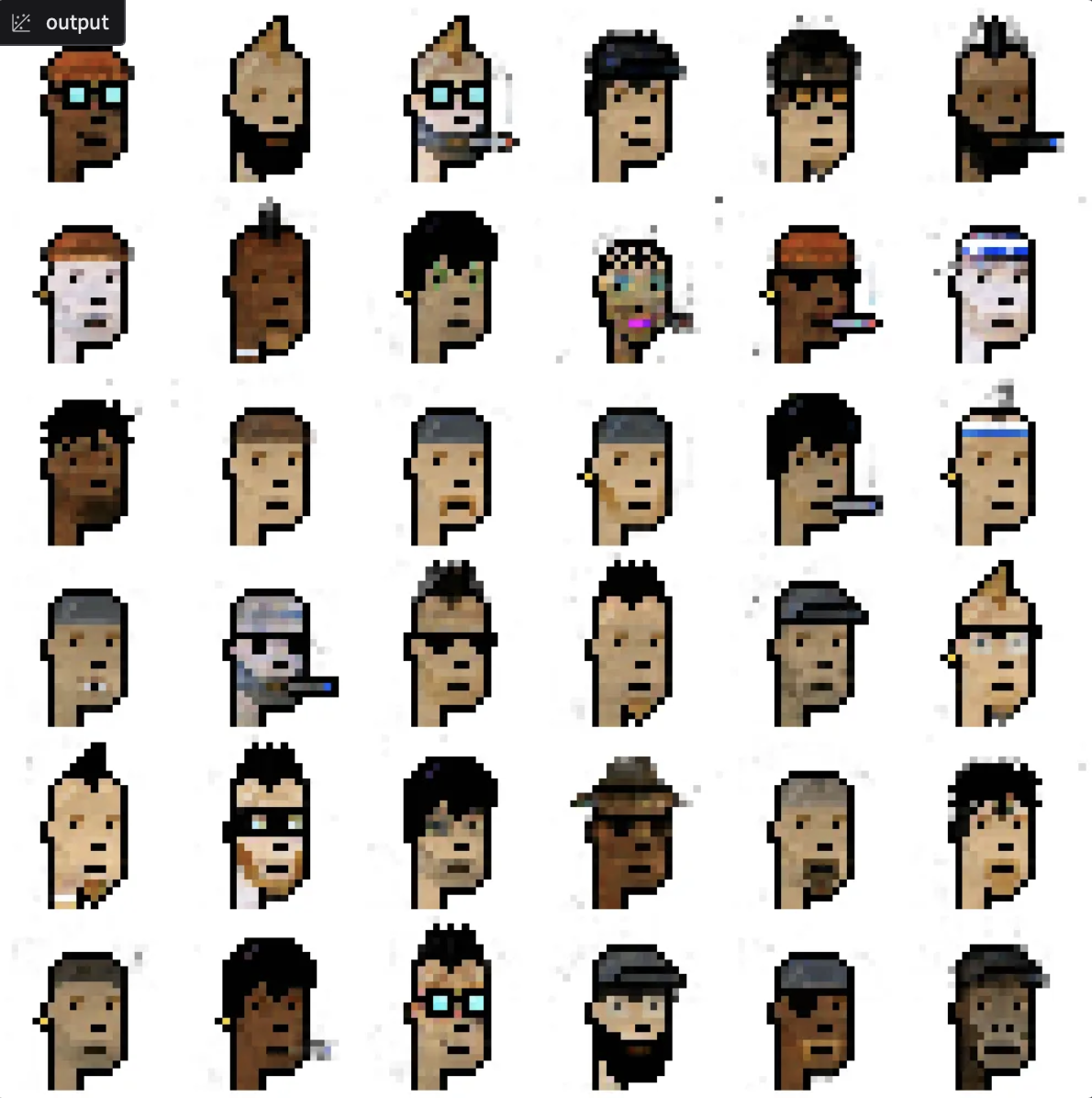

CryptoGAN is a lightweight GAN interface that lets you generate new pixel-art-style punk profile based on the original CryptoPunks dataset.

It builds on SNGAN. Whenever you see SNGAN, it builds from "Spectral Normalization for Generative Adversarial Networks" by Takeru Miyato, Toshiki Kataoka, Masanori Koyama, Yuichi Yoshida. Spectral normalization “stabilizes training,” the high-level idea is it helps ensure that the discriminator doesn't overpower the generator, leading to better image quality

This was not easy at all. And I wouldn’t say I’ve achieved what I set out to do either. My idea was to obtain 100% clear quality punk from the generator, or a grid filled with a set of entirely new punks. Like the originals, but iterating on their design.

Step 1 — Download fails

I started by trying to download the full CryptoPunks image dataset from cryptopunks.app, but quickly hit rate limits (HTTP 429). Turns out, scraping 10,000 images gets you cut off pretty fast. Introducing sleep limits and delays did not work either.

Instead I pivoted to the huggingnft/cryptopunks dataset on Hugging Face. This gave me clean, ready-to-use PNGs of all punks. I wrote a script to extract them into a local data/images/ folder. Success.

Step 2 — Training from scratch: fails, the sequel.

The original cryptogans repo claims to support training (train.py), but no script exists. Now, building a train script was not the idea. So I gave up on retraining the model and decided to just use the pretrained generator instead – not the coolest process.

Step 3 — Loading the pretrained model.

The repository uses from_pretrained_keras() — which broke immediately because Keras sucks because of a compatibility issue. Some painful debugging later, I learned that I needed to load the model using TFSMLayer()— the only way to load old SavedModels in Keras 3.

Step 4 — Gradio: deprecated code everywhere.

Once the model loaded, the Gradio app didn’t work. All examples used functions which were removed in Gradio 4x. I had to rewrite the interface using gr.Slider() and gr.Image() directly.

When the app finally ran, the output was washed out and blurry.

Not punk at all, sad ghosts at best.

I had two bugs here:

I was rescaling the images with (x + 1) / 2 even though the generator had already postprocessed them.

I was using matplotlib’s default interpolation, which blurred the 24×24 pixel art.

Step 5 — Fixing image clarity: Interpolation.

This was faster. The output tensor made the model return a dictionary with a "postprocess" key. Once I extracted that, clipped the values to [0, 1], converted them to uint8, and rendered them using interpolation="nearest", the punks looked punkier.

Also notice how the generated cigar introduced too much noise in the image. Some punks had half a cigar or smoke around them. No matter how many iterations the model seemed to skew against sepcial features like the pipes, and no matter how many iterations long hair punks were never present.

Difficulty: Mid for mid results

Restrictions: Rate limits on scrapping were the first obstacel. This could be tried again with higher sleep intervals, to scrape the og files. Taking the pretrained generator was ok, my idea was to see to training myself as the dataset is not that large.

- Low utility. Would not use again and not recommend.